Amazon Athena is a serverless, interactive query service that allows users to analyze data stored directly in Amazon Simple Storage Service (S3) without the need to move it. By utilizing standard SQL, Athena allows users—especially those familiar with SQL syntax—to run ad hoc queries efficiently, providing quick results. Additionally, Athena integrates seamlessly with Apache Spark, enabling advanced data analytics without the hassle of managing infrastructure.

How Amazon Athena Works:

- Querying Data: Users can point Athena at their data on S3 and run ad hoc SQL queries. This capability allows for quick data analysis without complex setup processes.

- Serverless Architecture: Athena is serverless, meaning users do not need to manage or provision infrastructure. This allows for automatic scaling and running of queries in parallel, ensuring fast results even for large datasets.

- Data Compatibility: Athena works with various data formats, including CSV, JSON, Apache Parquet, and Apache ORC, which support both structured and semi-structured data. This versatility enables comprehensive analysis without needing to preprocess data.

- Integration with AWS Services:

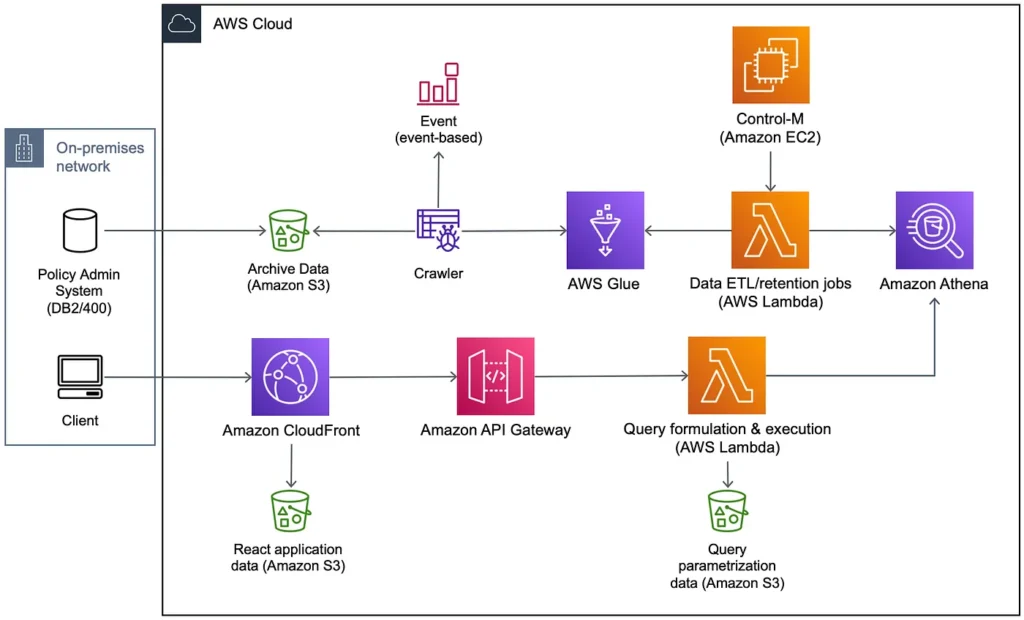

- AWS Glue: Athena integrates with the AWS Glue Data Catalog, which acts as a persistent metadata store, making data management and discovery seamless across AWS services.

- Amazon QuickSight: It also integrates with Amazon QuickSight, allowing for data visualization and exploration with business intelligence tools.

- AWS EMR and Spark: Users can leverage Athena within AWS EMR for interactive SQL analytics alongside Spark workloads.

- Cost-Effectiveness: You only pay for the queries you run, making Athena a cost-effective solution for data analysis. This economic model promotes efficient query execution without upfront costs.

- Interactive Analytics: The service is designed for speed and interactivity, enabling real-time data exploration directly on S3 without the need for data loading into another system.

💡 Why use Athena vs. alternatives?

Amazon Athena shines when you need ad-hoc or exploratory queries directly on data in Amazon S3 without setting up infrastructure. If you need a persistent data warehouse for complex analytics across structured data, Amazon Redshift is usually the better fit. For heavy batch processing, custom ETL pipelines, or large-scale distributed machine learning, Amazon EMR with Spark or Hadoop provides more flexibility and control. Together, these services complement each other, but Athena is often the fastest way to get insights directly from raw data.

In Amazon Athena, the hierarchy of objects is organized into catalogs, databases, and tables. Here’s how these components are structured:

- Catalogs: The highest level in the hierarchy, a catalog is essentially a data source that groups together multiple databases. In Athena, this is represented as the AWS Glue Data Catalog.

- Databases: Within a catalog, there can be one or more databases. A database serves as a logical grouping of tables. It holds metadata and schema information for the datasets it contains.

- Tables: Each database consists of one or more tables. A table is a container that defines the schema for the underlying data and holds metadata such as the location of the data in Amazon S3, the structure (column names and data types), and the table name.

Key Points:

- Glue Data Catalog: Athena uses the AWS Glue Data Catalog for storing and retrieving metadata. It integrates tightly with Athena, enabling efficient querying.

- Creating Tables: Tables must be created in Athena before querying data. This can be done either automatically (using an AWS Glue crawler) or manually (using the Athena console with Hive DDL statements).

This structure allows for efficient organization and querying of large datasets, as well as proper management of metadata through the Glue Data Catalog.

Hands-On with Amazon Athena: Insights and Learning

I am going to walk you through a demo project that will enable you to practice and master Amazon Athena.

Setting Up Athena: A Step-by-Step Overview

- Create an S3 Bucket:

The first step in using Athena involves setting up an S3 bucket to store the query results from processed queries. Log in to the AWS console, search for S3, and create a new bucket. Name it (e.g., athena-queries-12345) to avoid naming conflicts.

- Configure Athena Settings:

After creating the S3 bucket, open the Athena service in a new tab. Go to the settings and set the query result location to the S3 bucket you’ve just created. This step is crucial for storing the results of your queries.

- Database Creation:

The next step involves creating a new database. In the Athena interface, select AWS Data Catalog and proceed to create your database, which will act as a container for your tables and queries.

CREATE DATABASE mydatabase

- Table Creation and Data Catalog Integration:

Athena integrates with the AWS Glue Data Catalog, allowing for centralized metadata management and the easy creation of tables. By defining tables within your database, you can point Athena at your specific datasets in S3. - Next is to inspect some sample log data, which is provided by AWS. This data is available to everyone publicly available in each region. But we will be using the one available at us-east-1

s3://athena-examples-us-east-1/cloudfront/plaintext/

This DDL registers an external table in Athena that points to the data files in S3.

CREATE EXTERNAL TABLE IF NOT EXISTS cloudfront_logs ( Date DATE, Time STRING, Location STRING, Bytes INT, RequestIP STRING, Method STRING, Host STRING, Uri STRING, Status INT, Referrer STRING, ClientInfo STRING ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LINES TERMINATED BY '\n' LOCATION 's3://athena-examples-us-east-1/cloudfront/plaintext/';

Querying with Athena

Once the setup is complete, you can start querying your data with standard SQL commands directly in the Athena query editor. This interface provides a user-friendly environment for executing queries and analyzing results.

select case when clientinfo like '%IE/%' then 'Internet Explorer' when clientinfo like '%Chrome/%' then 'Chrome' when clientinfo like '%Firefox/%' then 'Firefox' when clientinfo like '%Safari/%' then 'Safari' when clientinfo like '%Opera/%' then 'Opera' else 'other' end as Browser, count(*) as RequestCount, sum(bytes) as TotalBytes from cloudfront_logs where date between date '2014-07-05' and date '2014-08-05' group by case when clientinfo like '%IE/%' then 'Internet Explorer' when clientinfo like '%Chrome/%' then 'Chrome' when clientinfo like '%Firefox/%' then 'Firefox' when clientinfo like '%Safari/%' then 'Safari' when clientinfo like '%Opera/%' then 'Opera' else 'other' end order by RequestCount desc

After running this query, you will see the result under query results

Additional tip

You can decide to check your recent queries, and you can see that as well in the Athena Query Editor under the Recent Queries tab.

Best Practices for Cleaning Resources After Using Amazon Athena

After working on hands-on demos with Amazon Athena or any other AWS services, it’s essential to clean up resources to avoid unnecessary charges and clutter in your AWS account. Here are some pro tips and best practices for resource cleanup:

Remove Tables and Databases in AWS Glue After Using Athena:

After completing your hands-on demo with Amazon Athena, it’s essential to clean up resources to avoid unnecessary costs and clutter. If you have created tables and databases in Amazon Athena, it can be deleted from the AWS Glue service, not directly from Athena. Here’s a detailed guide on how to remove them effectively:

- Navigate to the AWS Glue console.

- Go to the “Data Catalog” section and click on “Databases”.

- Select the database you created for the demo practice and click on “Delete.”

- Confirm the deletion.

database deletion on AWS Glue

Delete Unused S3 Buckets:

- If you created an S3 bucket solely for the demo, ensure you empty and delete it after your work is done. Navigate to the S3 console, select the bucket, and choose the delete option. Confirm the deletion and be sure to check for any remaining data.

emptying the S3 bucketS3 bucket deleted successfully

Document Your Resources:

- Keeping a record of resources created during the demo is beneficial. Document which resources were made and what the plans for them are. This practice helps in effective cleanup and smaller bill surprises later.

Utilizing Athena for Data Analysis

With Athena, you can analyze various data formats, including CSV, JSON, Apache Parquet, and ORC, without needing data movement. Queries can be run directly against the datasets stored in S3, providing immediate insights. The serverless nature of Athena means you only pay for the queries you run, making it a cost-effective solution for many use cases.

Conclusion and Learnings

Through hands-on experience with Amazon Athena, I have gained valuable insights into serverless data analysis, the ease of querying data stored in S3, and the importance of efficient data cataloging. This journey has not only expanded my understanding of data analytics but also provided practical skills in utilizing cloud solutions for real-time data exploration.

Athena’s capabilities can revolutionize how businesses interact with their data, highlighting the advantages of serverless architectures in modern data analytics.

📚 References & Further Reading

- Amazon Athena — User Guide — Official documentation covering overview, features, and getting started.

- Get Started Tutorial — Step-by-step guide on creating tables and running sample queries in Athena.

- Amazon Athena Pricing — Explains $/TB scanned pricing and Spark DPU pricing for Athena.

- Use AWS Glue Data Catalog with Athena — Learn how Glue integrates with Athena for schema metadata and crawlers.

- Query Amazon CloudFront Logs with Athena — Example DDL and queries for CloudFront logs.