In a world where AI is evolving faster than our tools can keep up, developers are hitting a familiar wall: integrating powerful language models into real-world tools and data systems is still clunky, ad hoc, and inconsistent. Despite all the recent breakthroughs in Generative AI, most of us still rely on messy glue code, brittle APIs, and custom adapters to connect our agents to real-world context. It’s frustrating—and it’s slowing innovation down.

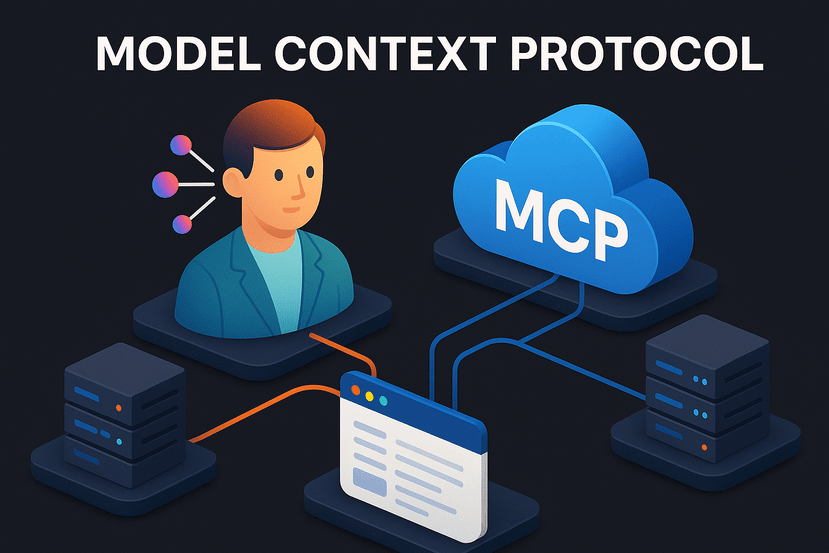

That’s exactly the kind of friction the Model Context Protocol (MCP) was created to eliminate.

Announced by Anthropic in late 2024, MCP isn’t just another spec. It’s quickly emerging as the de facto open standard for connecting large language models (LLMs) to external tools, workflows, and data sources. Think of it as the “USB-C for AI agents.” Just as USB-C standardized how we plug devices into laptops and phones, MCP standardizes how models interact with everything else: your codebase, your calendar, your CRM, your file system, your favorite DevOps tool.

In this deep dive, we’ll break down what MCP actually is, why it matters, what problems it solves, and where it’s already making a difference in real-world AI ecosystems.

What is the Model Context Protocol (MCP)?

At its core, MCP is a model-agnostic client-server protocol that allows AI applications (the “hosts”) to communicate with external “context providers” (the MCP servers). These servers expose tools, resources, and prompts that a model can use to reason more effectively, act on the user’s behalf, or generate more informed outputs.

Put simply, an MCP server is like an API that lives on your local machine or remote infrastructure and tells the model, “Hey, here are the files you can read, here are the actions you can perform, and here’s some guidance you can use.”

It exchanges JSON-RPC 2.0 messages over simple transports such as STDIO or HTTP, making it accessible, lightweight, and easy to implement. With SDKs available in Python, JavaScript/TypeScript, C#, Rust, Kotlin, and more, developers can spin up an MCP server in minutes and immediately start enhancing the contextual power of any AI model that speaks MCP. This includes not only Anthropic’s Claude but also models from OpenAI, Google DeepMind, Mistral, and open-source providers integrating the standard into their agents.
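To make the wire format concrete, here is a minimal sketch of building and framing a JSON-RPC 2.0 request using only the Python standard library. It assumes newline-delimited JSON for the STDIO transport and uses `tools/list` as the example method; the helper names (`make_request`, `encode_line`, `decode_line`) are hypothetical and are not part of any official SDK.

```python
import json
from itertools import count

_ids = count(1)  # each request in a session gets its own id


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request object."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def encode_line(msg):
    """Serialize one message as a single newline-terminated line (assumed STDIO framing)."""
    return json.dumps(msg, separators=(",", ":")) + "\n"


def decode_line(line):
    """Parse one line back into a message, rejecting anything that is not JSON-RPC 2.0."""
    msg = json.loads(line)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    return msg


# Hypothetical usage: what a host might write to a server's stdin.
wire = encode_line(make_request("tools/list"))
print(wire, end="")
```

In practice an SDK handles this framing for you; the point is only that the messages are plain JSON objects and easy to inspect.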

Why Was MCP Created?

Before MCP, connecting AI models to tools was like the early days of USB—every tool needed its own bespoke driver. Building a file reader for Claude? Write one integration. Need Slack support? That’s another one. Want to access GitHub or a database? More custom code, more brittle endpoints, more guesswork.

This created a huge M×N integration problem: with M models and N tools, every pairing needed its own connector, so each new tool or model added a whole row or column to the matrix. The result? AI agents were powerful in theory, but often blind and clumsy in practice. MCP was created to solve this chaos with one elegant principle: standardize the conversation between models and external resources. Now, instead of building direct integrations, developers expose tools via MCP servers. And any model that speaks MCP can discover and use them dynamically. This makes AI agents more flexible, adaptable, and, most importantly, more useful in real-world scenarios.
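A quick back-of-the-envelope calculation shows why this matters. The sketch below assumes the simplest possible model: one bespoke connector per host/tool pair without a shared protocol, versus one MCP client per host plus one MCP server per tool with it. The counts are illustrative, not measurements.

```python
def bespoke_integrations(hosts, tools):
    """Every host needs its own connector for every tool: M x N."""
    return hosts * tools


def mcp_integrations(hosts, tools):
    """Each host implements one client and each tool ships one server: M + N."""
    return hosts + tools


for hosts, tools in [(3, 5), (5, 20), (10, 100)]:
    print(
        f"{hosts} hosts, {tools} tools: "
        f"bespoke={bespoke_integrations(hosts, tools)}, "
        f"mcp={mcp_integrations(hosts, tools)}"
    )
```

The gap widens quickly: under these assumptions, 10 hosts and 100 tools means 1,000 connectors without a shared protocol and 110 components with one.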

What Problems Does MCP Solve?

1. Too Many Custom Integrations

MCP abstracts tool access behind a single consistent interface. This allows developers to build integrations once and reuse them across many hosts and models, cutting dev time, bugs, and maintenance overhead.

2. Lack of Tool Discoverability

Most AI models today operate in a vacuum. MCP allows them to dynamically discover tools that are available in the user’s environment (or remotely), and then use them intelligently. It’s like giving your agent superpowers—and letting it choose what it needs based on the task at hand.
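As a rough illustration of discovery, the sketch below merges tool listings from two servers into one catalog and checks a proposed call against a tool's declared inputs. The listing shape (`name`, `description`, and a JSON Schema under `inputSchema`) follows the MCP specification as I understand it; the server names, the sample tools, and the helpers `build_catalog` and `missing_arguments` are hypothetical.

```python
# Hypothetical tool listings, shaped like what two servers might return.
LIST_RESULTS = {
    "weather": {
        "tools": [
            {
                "name": "get_weather",
                "description": "Current weather for a city",
                "inputSchema": {
                    "type": "object",
                    "properties": {"city": {"type": "string"}},
                    "required": ["city"],
                },
            }
        ]
    },
    "files": {
        "tools": [
            {
                "name": "read_file",
                "description": "Read a text file under the project root",
                "inputSchema": {
                    "type": "object",
                    "properties": {"path": {"type": "string"}},
                    "required": ["path"],
                },
            }
        ]
    },
}


def build_catalog(list_results):
    """Merge per-server listings into one lookup keyed by '<server>.<tool>'."""
    catalog = {}
    for server, result in list_results.items():
        for tool in result["tools"]:
            catalog[f"{server}.{tool['name']}"] = tool
    return catalog


def missing_arguments(tool, arguments):
    """Return required argument names (per the tool's schema) that are absent."""
    required = tool.get("inputSchema", {}).get("required", [])
    return [name for name in required if name not in arguments]


catalog = build_catalog(LIST_RESULTS)
print(sorted(catalog))
print(missing_arguments(catalog["weather.get_weather"], {}))
```

A real host would hand the names, descriptions, and schemas to the model and let it choose; the catalog here just shows that nothing about the tools is hardcoded in the host.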

3. Inconsistent Security & Permission Models

Rather than leaking credentials or hardcoding API keys into prompts, MCP supports user consent, root scoping, and secure sandboxing. The host stays in control. And that’s critical for enterprise adoption.
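One way to picture "the host stays in control" is a consent gate that sits between the model and every tool call. This is a minimal sketch under stated assumptions, not how any particular host implements it: `ConsentGate`, `ConsentDenied`, and the `ask_user` and `invoke` callbacks are all hypothetical names.

```python
class ConsentDenied(Exception):
    """Raised when the user declines a tool call."""


class ConsentGate:
    def __init__(self, ask_user):
        self._ask_user = ask_user  # callback: (tool_name, arguments) -> bool
        self._approved = set()     # tools already approved in this session

    def call(self, tool_name, arguments, invoke):
        """Run invoke(tool_name, arguments) only if the user has approved the tool."""
        if tool_name not in self._approved:
            if not self._ask_user(tool_name, arguments):
                raise ConsentDenied(f"user declined {tool_name}")
            self._approved.add(tool_name)
        return invoke(tool_name, arguments)


# Hypothetical usage: a stand-in "user" that approves read-only tools only.
gate = ConsentGate(ask_user=lambda name, args: name.startswith("get_"))
print(gate.call("get_weather", {"city": "Oslo"}, lambda n, a: f"{n} ran"))
```

The design choice worth noting is that the decision lives in the host, not in the prompt, so the model cannot talk its way past it.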

4. Slow Agent Workflow Iteration

Every time you change a data source or endpoint in a traditional agent system, you’re probably rebuilding prompts, retooling code, and hoping nothing breaks. MCP removes that friction—tools and resources become pluggable, discoverable, and evolvable. That means faster iteration, faster time to value, and less tech debt.

Real-World Use Cases of MCP Servers

Coding Assistants

Tools like Cursor, Zed, and Sourcegraph Cody use MCP to power their AI code assistants. MCP servers provide live project context, documentation, test files, and even build scripts, allowing agents to give context-aware suggestions, refactor intelligently, and automate tasks like debugging or code generation.

Enterprise Agents

Companies like Block (Square) use internal MCP servers to expose business dashboards, customer support systems, and data warehouses to LLMs. This lets internal assistants answer questions, generate reports, or file tickets without needing dozens of fragile integrations.

Custom Developer Tools

Open-source MCP servers are popping up for everything from GitHub and Slack to PostgreSQL, Google Sheets, Hugging Face, AWS Lambda, and even Ableton Live. There’s now a full catalog on GitHub of community-contributed MCP servers you can start using today.

Personal Use

Even individual developers are spinning up MCP servers to allow models to read local files, search notes, browse photos, or automate tasks on their laptop—all from a single secure protocol.

How Does It Work?

An MCP session typically unfolds like this:

- The MCP host (AI model or agent) discovers one or more MCP servers.

- It sends a `listCapabilities` or `listTools` request (in the published specification, these correspond to the `initialize` handshake and the `tools/list` method).

- The server replies with JSON describing available tools, resources, and prompts.

- The model uses this metadata to decide which action to take.

- The host invokes a tool like `get_weather` or reads a resource like `docs/README.md`.

- The server responds, and the model incorporates the context into its reasoning.

All communication happens over JSON-RPC 2.0, and the model never gets access to more than it was explicitly given.
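The session flow can be sketched end to end with a toy, in-process server. This is a simplified illustration, not an SDK example: `ToyServer` and `run_session` are hypothetical, the method names (`tools/list`, `tools/call`, `resources/read`) and result shapes follow the MCP specification as I understand it, the initialization handshake is omitted, and the city and file contents are made up.

```python
import itertools


class ToyServer:
    """A stand-in for an MCP server that answers requests in-process."""

    def __init__(self):
        self._tools = {"get_weather": lambda args: f"Sunny in {args['city']}"}
        self._resources = {"docs/README.md": "# Demo project"}

    def handle(self, request):
        method = request["method"]
        params = request.get("params", {})
        if method == "tools/list":
            result = {"tools": [{"name": name} for name in self._tools]}
        elif method == "tools/call":
            text = self._tools[params["name"]](params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}]}
        elif method == "resources/read":
            uri = params["uri"]
            result = {"contents": [{"uri": uri, "text": self._resources[uri]}]}
        else:
            # -32601 is the standard JSON-RPC "Method not found" error code.
            error = {"code": -32601, "message": "Method not found"}
            return {"jsonrpc": "2.0", "id": request["id"], "error": error}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def run_session(server):
    """Discover tools, call one, and read one resource."""
    ids = itertools.count(1)

    def rpc(method, params=None):
        request = {"jsonrpc": "2.0", "id": next(ids), "method": method}
        if params is not None:
            request["params"] = params
        response = server.handle(request)
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]

    tools = [tool["name"] for tool in rpc("tools/list")["tools"]]
    weather = rpc("tools/call", {"name": "get_weather", "arguments": {"city": "Oslo"}})
    readme = rpc("resources/read", {"uri": "docs/README.md"})
    return {
        "tools": tools,
        "weather": weather["content"][0]["text"],
        "readme": readme["contents"][0]["text"],
    }


if __name__ == "__main__":
    print(run_session(ToyServer()))
```

Note that the host only ever sees what the server chose to list, which is the "never more than it was explicitly given" property in miniature.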

What About Security?

Security is a first-class concern in MCP’s design. Each connection can be scoped to a specific root directory (for local servers), and server discovery mechanisms prevent accidental connections to untrusted endpoints.
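Root scoping is easy to get subtly wrong, so here is a minimal sketch of the check a local file server might perform before reading anything. It is an assumption about how such a check could look, not code from any MCP server; `is_within_roots` and the example paths are hypothetical.

```python
from pathlib import Path


def is_within_roots(candidate, roots):
    """True if candidate resolves to a location inside one of the allowed roots."""
    resolved = Path(candidate).resolve()
    for root in roots:
        root_resolved = Path(root).resolve()
        # Compare path components, not string prefixes, so that
        # "/srv/project-evil" is not mistaken for "/srv/project".
        if resolved == root_resolved or root_resolved in resolved.parents:
            return True
    return False


# Hypothetical usage with a made-up project root.
ROOTS = ["/srv/project"]
print(is_within_roots("/srv/project/docs/README.md", ROOTS))
print(is_within_roots("/srv/project/../secrets.txt", ROOTS))
```

Resolving the path first matters: it collapses `..` segments and follows symlinks before the comparison, so a request cannot escape the root by spelling the path creatively.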

In addition, advanced frameworks like ETDI and MCP Guardian are already adding features like:

- OAuth-based identity verification

- Policy-based access control

- Execution rate limiting

- Audit logs
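To give a feel for the last two items, here is a generic sketch of a wrapper that rate-limits tool calls and records each attempt. It does not reproduce the API of ETDI or MCP Guardian; `GuardedInvoker`, its parameters, and the sliding-window policy are assumptions chosen for illustration.

```python
import time
from collections import deque


class GuardedInvoker:
    """Wraps a tool-invoking callable with a sliding-window rate limit and an audit log."""

    def __init__(self, invoke, max_calls, per_seconds, clock=time.monotonic):
        self._invoke = invoke
        self._max_calls = max_calls
        self._per_seconds = per_seconds
        self._clock = clock
        self._recent = deque()  # timestamps of accepted calls inside the window
        self.audit_log = []     # (timestamp, tool_name, outcome) tuples

    def call(self, tool_name, arguments):
        now = self._clock()
        # Drop timestamps that have aged out of the window.
        while self._recent and now - self._recent[0] >= self._per_seconds:
            self._recent.popleft()
        if len(self._recent) >= self._max_calls:
            self.audit_log.append((now, tool_name, "rate_limited"))
            raise RuntimeError("rate limit exceeded")
        self._recent.append(now)
        result = self._invoke(tool_name, arguments)
        self.audit_log.append((now, tool_name, "ok"))
        return result


# Hypothetical usage: allow at most 2 calls per 10 seconds.
guard = GuardedInvoker(lambda name, args: f"{name} ran", max_calls=2, per_seconds=10)
print(guard.call("get_weather", {"city": "Oslo"}))
```

The clock is injectable so the policy can be tested without sleeping; a production system would also persist the log somewhere tamper-resistant.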

This makes MCP suitable not just for personal tinkering but also for enterprise-scale, multi-agent, production-grade AI systems.

The Future of AI is Modular, and MCP is Leading the Way

We’ve seen this pattern before in computing: complexity explodes, and we tame it with a shared standard. HTTP for the web. SMTP for email. USB for hardware. Kubernetes for containers.

Now, MCP is doing the same for AI.

As agent-based systems grow in popularity, they need a way to safely and reliably interact with real-world context and tools. And they need to do it without every developer writing the same 15 glue scripts and 20 prompt hacks. That’s where MCP shines. It’s lightweight, elegant, secure, and developer-friendly. And it’s already here. Whether you’re building the next generation of code assistants, smart internal bots, AI devtools, or creative personal agents, MCP gives you a common language to connect everything.

Final Thoughts

The Model Context Protocol is more than just a spec. It’s an ecosystem-enabler. It’s an idea whose time has come. And it’s your agent’s new favorite way to interact with the world. So if you’re a developer, a startup, or an enterprise innovating with LLMs, get familiar with MCP. You’ll write less code, build smarter apps, and unlock capabilities that were previously locked behind complexity.

Ready to dive deeper? Check out the official docs: modelcontextprotocol.io

Or explore the MCP server catalog: github.com/modelcontextprotocol

Your agents—and your future self—will thank you.