When you hear the term platform engineering, the first thing that likely comes to mind is an IDP, which in most cases stands for Internal Developer Portal. I believe the “P” can still stand for Platform, but the market pushes the “portal” more because of the immediate value visible through a Graphical User Interface (GUI). Before a portal GUI, a well-structured platform that integrates tools and services needs to be set up. The platform components need to be reusable, which means applying DRY (Don’t Repeat Yourself) at a very deep level. Only when setups and configurations are easily pluggable does adding a GUI become much easier. How do you set up the infrastructure? Is IaC in use? Is it properly modularized? How are your pipelines written? Do you have a unique pipeline for every workload, or is there a templated pipeline configuration with parameters that makes it reusable across repositories?

These are the foundations of building a proper platform that is operationally scalable, resilient, and easy to manage in the long run. Below, I explain these foundational practices and principles, along with existing tools you can use to implement them. Let us begin with standardizing how new repositories are created.

Use GitHub Template Repositories

This involves setting up a boilerplate template repository that contains all the base requirements and configurations your applications need. Files such as .npmrc, the Dockerfile, and CI/CD configurations (e.g., .gitlab-ci.yml or the .github folder) are all preconfigured in this template repository. Whenever a developer needs a new repository for an application, they do not have to start from scratch. Instead, they create it from the template repository, and it automatically has all the required files and configurations.

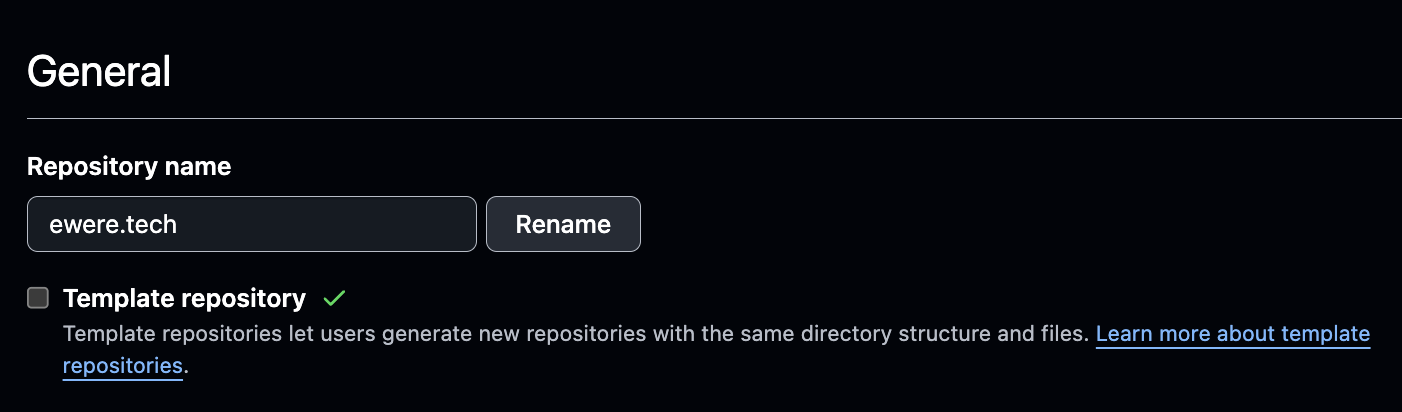

To make a repository a template on GitHub, open the Settings tab of that repository, locate the Template repository checkbox, and select it, as shown in the following screenshot.

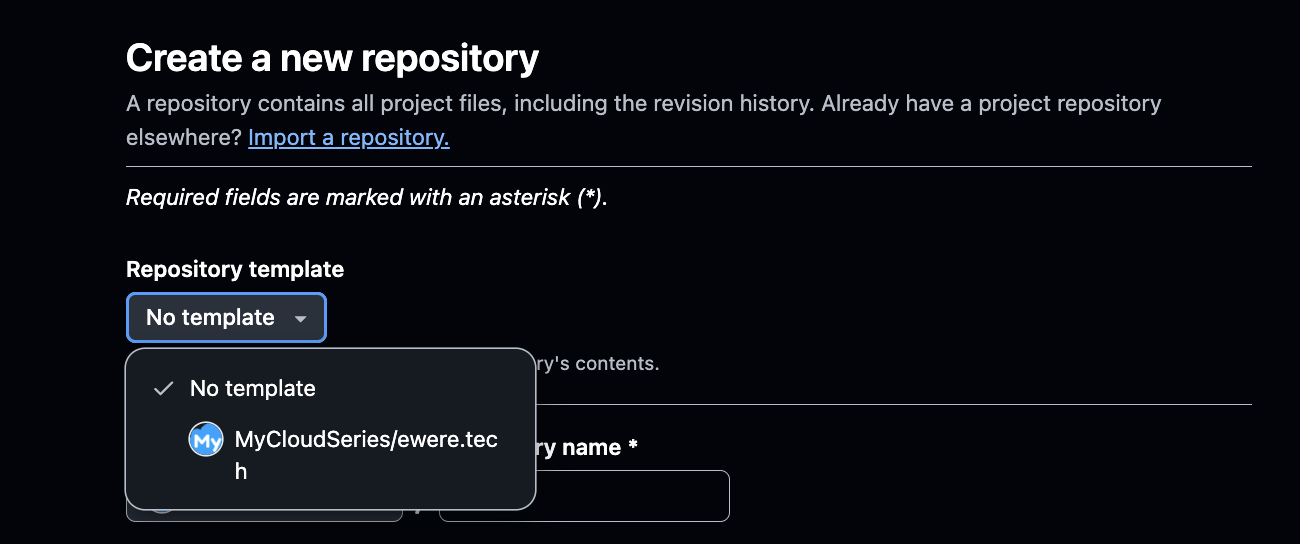

This saves time configuring CI/CD pipelines, security settings, and authentication for new applications and repositories whenever developers are ready to deploy. The first screenshot in this section shows where the template repository can be selected when creating a new repository.

Other Git providers might not offer this feature, but the goal remains the same: a boilerplate template repository that every new repository starts from. Repositories can be grouped into three types based on the application's functionality:

- API service

- Frontend App

- Background Jobs
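If you create many repositories, both template steps above can also be scripted against the GitHub REST API. The minimal Python sketch below only builds the requests and does not send them. One request marks a repository as a template, which is the equivalent of the Settings checkbox. The other creates a new repository from the template that matches one of the three application types. The template repository names and the my-org organization are hypothetical, as is the assumption that templates live in the same organization as the new repositories.

```python
import json
import urllib.request

API = "https://api.github.com"

# Hypothetical naming: one template repository per application type.
TEMPLATES = {
    "api": "template-api-service",
    "frontend": "template-frontend-app",
    "jobs": "template-background-jobs",
}


def _request(method: str, path: str, body: dict, token: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated GitHub REST API request."""
    return urllib.request.Request(
        url=f"{API}{path}",
        data=json.dumps(body).encode("utf-8"),
        method=method,
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
    )


def mark_as_template(org: str, repo: str, token: str) -> urllib.request.Request:
    """Same effect as ticking the Template repository checkbox in Settings."""
    return _request("PATCH", f"/repos/{org}/{repo}", {"is_template": True}, token)


def create_from_template(org: str, app_type: str, new_repo: str, token: str,
                         private: bool = True) -> urllib.request.Request:
    """Create `new_repo` from the template that matches `app_type`."""
    if app_type not in TEMPLATES:
        raise ValueError(f"unknown app type {app_type!r}; expected one of {sorted(TEMPLATES)}")
    template = TEMPLATES[app_type]
    body = {"owner": org, "name": new_repo, "private": private}
    # POST /repos/{template_owner}/{template_repo}/generate
    return _request("POST", f"/repos/{org}/{template}/generate", body, token)
```

To actually send a request, pass it to urllib.request.urlopen with a token that is allowed to create repositories in the organization.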

The next strategy is to use modules in your IaC.

Use Modules for your IaC

Every IaC tool has some mechanism for reuse, so that code for identical operations is not written twice. In Terraform, these are called modules. A Terraform module can be structured so that all the components your infrastructure needs are bundled into a single unit. This unit can be hosted remotely, referenced, and reused many times, with input variables adjusting it for specific use cases.

These modules form the basis of all infrastructure orchestration and management within your organization. They ensure consistent configuration of all infrastructure components and proper integration of the different services an application needs to run efficiently. The Terraform Registry also hosts many ready-made modules for different use cases. Once implemented, modules save a huge amount of time when setting up infrastructure and make it much easier to manage over time.

This aligns with the principles of platform engineering, which hold that infrastructure operations should have guardrails, golden paths, and security standards built into every operation.
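To make the reuse concrete, here is a minimal Python sketch that renders a main.tf.json file calling the same module once per service. Terraform also accepts JSON syntax for configuration files. Several parts are hypothetical: the module source URL, its ref, and the variable names (environment, min_capacity, enable_encryption). They would have to match the inputs your own module declares. The defaults stand in for the guardrails, and each service overrides only what it needs.

```python
import json

# Hypothetical module source: an organization-owned module pinned to a tag.
MODULE_SOURCE = "git::https://github.com/my-org/terraform-modules.git//service?ref=v1.4.0"


def module_block(service: str, env: str, **overrides) -> dict:
    """Return one module call with opinionated defaults (the guardrails)
    that a caller may override for its specific use case."""
    inputs = {
        # Hypothetical variable names; they must exist in the module.
        "name": f"{service}-{env}",
        "environment": env,
        "min_capacity": 2,
        "enable_encryption": True,
    }
    inputs.update(overrides)
    return {"source": MODULE_SOURCE, **inputs}


def render_main_tf_json(services: dict[str, dict], env: str) -> str:
    """Render Terraform JSON syntax that calls the same module per service."""
    config = {"module": {name: module_block(name, env, **opts)
                         for name, opts in services.items()}}
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(render_main_tf_json({"payments": {}, "search": {"min_capacity": 3}}, env="prod"))
```

Pinning the module source to a tag (ref=v1.4.0 here) lets you roll out module changes service by service instead of all at once.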

Reusable and Parameterized CI/CD Pipelines

Whether you use GitHub, GitLab, or CircleCI, each offers some form of modular pipeline configuration. This helps avoid repeating pipeline configuration: “death to COPY and PASTE”. When there are hundreds of repositories to configure pipelines for, a parameterized template reduces the number of lines in each repository's pipeline file. It takes in application-specific settings through its parameters. This eliminates copy-and-paste of pipeline code and reduces errors across the system.

It also makes it possible to enforce security standards and guardrails and to manage all pipelines used within the organization centrally. Each vendor names this feature differently:

- GitHub: Reusable Workflows and Composite Actions

- GitLab: GitLab Includes

- CircleCI: Orbs

- Bitbucket: Bitbucket Pipelines Pipes

- Jenkins: Jenkins Shared Libraries

These share the same goal and can be implemented in similar ways, although their syntax differs. With this kind of configuration, pipelines are easily reusable and can be included in the boilerplate template repositories for developers.
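Taking GitHub's reusable workflows as the example, the per-repository pipeline shrinks to a short caller file. That file holds a single job that uses a centrally maintained workflow and passes the application's settings as inputs. The Python sketch below renders that caller file from a template. Several names are hypothetical: the platform-pipelines repository, the service workflow, and the app-name and dockerfile inputs. The reusable workflow would have to declare matching workflow_call inputs.

```python
from string import Template

# Caller workflow kept in each application repository. The reusable workflow
# it points to (my-org/platform-pipelines, hypothetical) holds all real steps.
CALLER = Template("""\
name: ci
on:
  push:
    branches: [main]
jobs:
  pipeline:
    uses: my-org/platform-pipelines/.github/workflows/$kind.yml@$ref
    with:
      app-name: $app_name
      dockerfile: $dockerfile
    secrets: inherit
""")


def render_caller(app_name: str, kind: str = "service", ref: str = "v1",
                  dockerfile: str = "Dockerfile") -> str:
    """Return the per-repository workflow file; only the parameters vary."""
    return CALLER.substitute(app_name=app_name, kind=kind, ref=ref,
                             dockerfile=dockerfile)
```

Because the build, scan, and deploy steps live in the shared workflow, a security fix there reaches every repository that references the updated ref.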

Well-Architected Kubernetes Manifest

I previously wrote a piece explaining what it means to have a Well-Architected Kubernetes cluster. This is different: it concerns the Kubernetes manifests used for your deployments. A manifest needs to account for different aspects of an application to ensure its reliability, security, and operational management. I prefer Helm for managing Kubernetes manifests because of its flexibility and scalability across different deployment scenarios.

Beyond operational management, consider security configuration: have you ensured the pod does not allow privilege escalation? Lastly, consider the reliability of the manifest. I recommend that production apps run at least 2 replicas, spread across nodes, with a well-configured rolling update strategy to avoid downtime during deployments.

These configurations help eliminate manual work in managing the pods running in your Kubernetes cluster. Click on the link for a sample of a well-architected Kubernetes Deployment file.
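The checklist above can also be expressed in code. The Python sketch below builds a Deployment manifest as a plain dictionary with these defaults:

- 2 replicas
- rolling updates with maxUnavailable: 0
- topology spread across nodes
- privilege escalation disabled

It also includes a small checker that reports violations, the kind of check you might run in CI before a manifest is applied. In practice, these defaults would live in your Helm templates. The image name and resource values are placeholders. Requiring maxUnavailable: 0 is my assumption of a reasonable house rule, not a Kubernetes requirement.

```python
def deployment(name: str, image: str, replicas: int = 2) -> dict:
    """Return a Deployment manifest (as a dict) with reliability and
    security defaults baked in."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            # Start a new pod before removing an old one during rollouts.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxUnavailable": 0, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Prefer placing replicas on different nodes.
                    "topologySpreadConstraints": [{
                        "maxSkew": 1,
                        "topologyKey": "kubernetes.io/hostname",
                        "whenUnsatisfiable": "ScheduleAnyway",
                        "labelSelector": {"matchLabels": labels},
                    }],
                    "containers": [{
                        "name": name,
                        "image": image,
                        "securityContext": {
                            "allowPrivilegeEscalation": False,
                            "runAsNonRoot": True,
                        },
                        "resources": {
                            "requests": {"cpu": "100m", "memory": "128Mi"},
                            "limits": {"memory": "256Mi"},
                        },
                    }],
                },
            },
        },
    }


def violations(dep: dict, min_replicas: int = 2) -> list[str]:
    """List guardrail violations for a Deployment dict (empty list = OK)."""
    problems = []
    spec = dep["spec"]
    # Kubernetes defaults replicas to 1 when the field is omitted.
    if spec.get("replicas", 1) < min_replicas:
        problems.append(f"replicas < {min_replicas}")
    strategy = spec.get("strategy", {})
    rolling = strategy.get("rollingUpdate", {})
    if strategy.get("type", "RollingUpdate") != "RollingUpdate" or rolling.get("maxUnavailable") != 0:
        problems.append("rolling update may reduce capacity (set maxUnavailable: 0)")
    pod = spec["template"]["spec"]
    if not pod.get("topologySpreadConstraints") and "podAntiAffinity" not in pod.get("affinity", {}):
        problems.append("no node spread configured")
    for c in pod["containers"]:
        if c.get("securityContext", {}).get("allowPrivilegeEscalation") is not False:
            problems.append(f"container {c['name']}: privilege escalation not disabled")
    return problems
```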

Once the Helm chart is ready, do we make a copy of it for every microservice? Is there a better way to manage Helm charts while applying the DRY principle? The next section addresses this.

Single Deployment Source of Truth with Mono Helm Chart

The Mono Helm Chart is a concept I wrote about last year, covering how I implemented it and the sample code I used. The idea is to create a single Helm chart that can be reused across applications. A CI/CD pipeline template can be referenced and used in multiple repositories, and the Mono Chart applies the same principle. We have a single Helm repository or package, and its one chart serves all application deployments.

This is the GitHub repo for the code, which you can use and contribute to. It is a bare-minimum implementation, so you can modify it for your own use case.
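With one shared chart, each service supplies only a small values file that is layered on top of the chart's defaults. The Python sketch below approximates how Helm combines them: nested maps are merged, lists and scalars are replaced, and a null removes a default key. Helm's real merge has more edge cases than this. The chart name my-org/mono-chart and all values shown are hypothetical.

```python
import copy

# Hypothetical defaults shipped in the single shared chart's values.yaml.
CHART_DEFAULTS = {
    "replicaCount": 2,
    "image": {"repository": "", "tag": "latest", "pullPolicy": "IfNotPresent"},
    "service": {"port": 8080},
    "ingress": {"enabled": False, "hosts": []},
}

# Hypothetical per-service values file, kept in the service's own repository.
PAYMENTS_VALUES = {
    "image": {"repository": "registry.example.com/payments", "tag": "1.4.2"},
    "ingress": {"enabled": True, "hosts": ["payments.example.com"]},
}


def merge_values(base: dict, override: dict) -> dict:
    """Layer `override` on top of `base`: nested maps merge key by key,
    scalars and lists are replaced wholesale, and None (YAML null)
    removes the key from the defaults. Neither input is mutated."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if value is None:
            result.pop(key, None)
        elif isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


def helm_command(release: str, values_file: str, namespace: str,
                 chart: str = "my-org/mono-chart") -> list[str]:
    """Every service deploys the same chart; only the values file differs."""
    return ["helm", "upgrade", "--install", release, chart,
            "--namespace", namespace, "-f", values_file]
```

Each service then deploys with the same command, for example helm upgrade --install payments my-org/mono-chart --namespace prod -f values.yaml. Only the values file changes between services.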

Efficient Feedback with Robusta on Slack

Feedback is one of the core tenets of DevOps; the DevOps logo itself is an endless loop. Feedback keeps engineers and all stakeholders constantly aware of the state of the system. The challenge with alerting, most of the time, is sifting through the noise. Many monitoring systems, especially those tracking metrics, send a lot of alerts that might not be relevant to everyone on the team. The following strategies can help you configure proper alerting for your platform and keep it manageable over time:

– Silence warning- and notice-level alerts

– Configure proper alert routing to the relevant people based on job roles

– Use synthetic monitoring to get just-in-time (JIT), uni-dimensional notifications

– Set an SLA for fixing recurring noisy alerts (e.g., OOM issues, disk space issues)

With these structures in place, the alerting platform is easier to manage. Robusta is a great tool that sits on top of Prometheus and Alertmanager to provide a better user experience for alerts. See more about Robusta here.
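To illustrate the routing and noise rules above, here is a plain-Python sketch. It is not Robusta's or Alertmanager's configuration format, just the logic in miniature:

- Warning- and notice-level alerts are dropped.
- The first matching label rule picks a Slack channel, similar in spirit to Alertmanager's routing tree.
- Alerts that fire repeatedly are flagged as candidates for an SLA-bound fix.

The channel names, labels, and threshold are hypothetical.

```python
from collections import Counter
from typing import Optional

# Severities that are silenced rather than routed to anyone.
SILENCED = {"warning", "notice", "info"}

# Hypothetical routing table: the first rule whose labels all match wins.
ROUTES = [
    ({"team": "payments"}, "#payments-alerts"),
    ({"team": "platform"}, "#platform-oncall"),
    ({"category": "database"}, "#dba-alerts"),
]
DEFAULT_CHANNEL = "#ops-alerts"


def route(labels: dict) -> Optional[str]:
    """Return the Slack channel for an alert, or None if it is silenced."""
    if labels.get("severity") in SILENCED:
        return None
    for matchers, channel in ROUTES:
        if all(labels.get(key) == value for key, value in matchers.items()):
            return channel
    return DEFAULT_CHANNEL


def noisy_alerts(history: list, threshold: int = 5) -> list:
    """Alert names that fired at least `threshold` times in `history`;
    these are candidates for a fix ticket with an SLA."""
    counts = Counter(alert["alertname"] for alert in history)
    return sorted(name for name, n in counts.items() if n >= threshold)
```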

Easy-to-use Dashboards for Observability

What is infrastructure without observability? Spend time setting up user-friendly dashboards that let engineers navigate easily and search logs and metrics. Too often, dashboards are not user-friendly, which makes it hard for engineers to find what they are looking for. You do not want to be called on every time an engineer needs help pulling logs or navigating the dashboards.

Whatever observability tool you choose, be it Amazon CloudWatch, Graylog, the LGTM stack, or the Elastic Stack, the most important goal is to make the dashboards user-friendly and easy to navigate. This saves time whenever any team member needs to debug something in the environment. I use Grafana for my observability system, the LGTM stack to be precise. The Grafana Dashboards library has simple, easy-to-use dashboard templates that anyone can integrate.
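As an example of a navigable dashboard, the Python sketch below generates a trimmed-down Grafana dashboard JSON with namespace and app dropdowns. Engineers can then filter metrics and Loki logs without writing queries. Several details are assumptions:

- the http_requests_total metric
- its namespace and app labels
- the data source UIDs

Adjust them to your setup. Treat the output as a starting point to import and refine in Grafana, since it covers only a subset of the dashboard model's fields.

```python
import json


def app_dashboard(title: str, prom_uid: str = "prometheus", loki_uid: str = "loki") -> dict:
    """Build a minimal Grafana dashboard model with namespace/app filters."""
    prom = {"type": "prometheus", "uid": prom_uid}  # hypothetical UIDs
    loki = {"type": "loki", "uid": loki_uid}
    variables = [
        # Dropdowns populated from label values of a (hypothetical) metric.
        {"name": "namespace", "type": "query", "datasource": prom,
         "query": "label_values(http_requests_total, namespace)"},
        {"name": "app", "type": "query", "datasource": prom,
         "query": 'label_values(http_requests_total{namespace="$namespace"}, app)'},
    ]
    # Both panels reuse the dropdown selections via $namespace and $app.
    selector = 'namespace="$namespace", app="$app"'
    panels = [
        {"type": "timeseries", "title": "Requests per second", "datasource": prom,
         "gridPos": {"x": 0, "y": 0, "w": 12, "h": 8},
         "targets": [{"refId": "A", "expr": f"sum(rate(http_requests_total{{{selector}}}[5m]))"}]},
        {"type": "logs", "title": "Logs", "datasource": loki,
         "gridPos": {"x": 0, "y": 8, "w": 24, "h": 12},
         "targets": [{"refId": "A", "expr": f"{{{selector}}}"}]},
    ]
    return {"title": title, "templating": {"list": variables}, "panels": panels}


if __name__ == "__main__":
    print(json.dumps(app_dashboard("Service overview"), indent=2))
```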

With all these systems configured following DRY principles, managing infrastructure becomes a “true DevOps” operation in which software engineers are heavily involved. They do not have to understand everything. They can work with a 10-line CI/CD pipeline and a 15-line Terraform module to set up infrastructure and deploy applications, even without a DevOps engineer, because the core system that makes this work has been properly built and structured. If anything goes wrong, alerting and observability give developers quick insight into the system's behavior.

The DevOps or Platform Engineer (whatever the label) then manages only the core platform integrations and drives innovation in that sphere, rather than fixing pipelines or Terraform code daily.

Conclusion

The IDP in platform engineering, where the P means portal, is a layer on top of a well-structured, well-defined platform that can easily be driven through API calls to orchestrate operations.

So, before thinking about a portal, first build a DRY, reusable platform layer that requires little human intervention; then you are ready to slap a portal on top of it.