As AI adoption continues to boom, one part of the system is regularly mentioned but rarely explained in detail: the computational hardware that makes all our lovely LLMs run.

ChatGPT, Amazon Q, Amazon Nova, DeepSeek and the like all depend on a core hardware component called the GPU, short for Graphics Processing Unit.

This component was first widely used in the gaming world. I remember playing computer games as a child: when my games were slow, an engineer would recommend adding a GPU. Doing so greatly improved everything from rendering speed to the gameplay experience.

The same capability that speeds up games is what blockchain technology and AI rely on. In basic terms, it is about computational power: how many calculations the hardware can carry out at the same time.

GPUs deliver far higher throughput than CPUs on workloads that can be split into many independent calculations. Before differentiating the two further, let us briefly review the definitions of a CPU and a GPU.

What Are a CPU and a GPU?

According to Wikipedia, a CPU is defined as

“A central processing unit (CPU), also called a central processor, main processor, or just processor, is the primary processor in a given computer. Its electronic circuitry executes instructions of a computer program, such as arithmetic, logic, controlling, and input/output (I/O) operations. This role contrasts with that of external components, such as main memory and I/O circuitry, and specialized coprocessors such as graphics processing units (GPUs).”

whereas a GPU is defined as

“A graphics processing unit (GPU) is a specialized electronic circuit initially designed for digital image processing and to accelerate computer graphics, being present either as a discrete video card or embedded on motherboards, mobile phones, personal computers, workstations, and game consoles. After their initial design, GPUs were found to be useful for non-graphic calculations involving embarrassingly parallel problems due to their parallel structure. GPUs ability to perform vast numbers of calculations rapidly has led to their adoption in diverse fields including artificial intelligence (AI) where it excels at handling data-intensive and computationally demanding tasks. Other non-graphical uses include the training of neural networks and cryptocurrency mining. With increasing use of GPUs in non-graphical applications, mainly AI, the term GPU is also erroneously used to describe specialized electronic circuits designed purely for AI and other specialized applications.”

Components of a CPU

ALU (Arithmetic Logic Unit)

- Performs mathematical calculations (addition, subtraction, multiplication, division) and logical operations (AND, OR, NOT, XOR).

- Essential for executing instructions in applications and operating systems.

Control Unit (CU)

- Directs and manages the execution of instructions.

- Fetches instructions from memory, decodes them, and coordinates execution by sending control signals to other CPU components.

Registers

- Small, ultra-fast storage locations within the CPU used to hold temporary data and instructions.

- Includes:

  - General-Purpose Registers (store intermediate values during processing)

  - Instruction Register (IR) (holds the currently executing instruction)

  - Program Counter (PC) (stores the address of the next instruction to execute)

  - Accumulator (AC) (stores results of arithmetic and logical operations)
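To make the roles of the control unit and these registers concrete, here is a minimal sketch of a fetch-decode-execute loop in Python. The three-instruction set (`LOAD`, `ADD`, `HALT`) and the `run` function are hypothetical, invented purely for illustration; a real CPU decodes binary machine code defined by its ISA.

```python
def run(program):
    """Simulate a toy accumulator machine and return the final accumulator value."""
    pc = 0    # Program Counter: address of the next instruction
    acc = 0   # Accumulator: holds the result of ALU operations
    while True:
        ir = program[pc]       # fetch: copy the instruction into the Instruction Register
        pc += 1                # the PC now points at the next instruction
        opcode, operand = ir   # decode: split into operation and operand
        if opcode == "LOAD":   # execute: put a value into the accumulator
            acc = operand
        elif opcode == "ADD":  # execute: the ALU adds the operand to the accumulator
            acc += operand
        elif opcode == "HALT":
            return acc
        else:
            raise ValueError(f"unknown opcode: {opcode}")

program = [("LOAD", 2), ("ADD", 3), ("ADD", 10), ("HALT", None)]
result = run(program)  # computes 2 + 3 + 10
```

Here `result` ends up as 15. The point of the sketch is the order of the steps: every instruction passes through the same fetch, decode, and execute cycle, one after another.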

Cache Memory (L1, L2, L3)

- High-speed memory within the CPU that stores frequently accessed data and instructions.

- L1 Cache (fastest, closest to CPU core), L2 Cache (larger but slower), and L3 Cache (shared among multiple cores, improves efficiency).
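Why a cache helps can be shown with a small simulation. The sketch below counts how often an access is served from a tiny least-recently-used (LRU) cache. The capacity of 8 entries and the two access patterns are made up for illustration; real CPU caches work on fixed-size cache lines, are usually set-associative, and are managed entirely in hardware.

```python
from collections import OrderedDict

def hit_rate(addresses, capacity):
    """Return the fraction of accesses served from a small LRU cache."""
    cache = OrderedDict()  # keys are cached addresses, ordered oldest to newest
    hits = 0
    for addr in addresses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)        # mark as most recently used
        else:
            cache[addr] = True             # miss: load the address into the cache
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits / len(addresses)

# A loop that keeps touching the same 4 addresses fits in the cache...
reused = [0, 1, 2, 3] * 25
# ...while a single pass over 100 distinct addresses never reuses anything.
streamed = list(range(100))

reused_rate = hit_rate(reused, capacity=8)      # only the first 4 accesses miss
streamed_rate = hit_rate(streamed, capacity=8)  # every access misses
```

The reused pattern hits the cache on 96 of its 100 accesses, while the streamed pattern never hits. This is why code that revisits the same data benefits so much from cache memory.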

Cores

- Independent processing units that execute instructions simultaneously.

- Modern CPUs have multiple cores (e.g., 4, 8, 16, or more) to improve multitasking and parallel processing.

Threads & Hyper-Threading

- A core can handle multiple threads (smaller units of a process).

- Intel Hyper-Threading (HT) or AMD Simultaneous Multithreading (SMT) allows a single core to handle two threads simultaneously, improving efficiency.

Clock & Clock Speed

- The clock synchronizes CPU operations. Its speed is measured in GHz (e.g., 2.5 GHz to 5.5 GHz), meaning billions of cycles per second.

- A higher clock speed enables faster execution of instructions.

Instruction Set Architecture (ISA)

- Defines the set of machine-level instructions a CPU can execute (e.g., x86, ARM, RISC-V).

- Determines compatibility with software and operating systems.

Bus Interface (Front-Side Bus, Memory Bus, PCIe)

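- The system bus (historically the Front-Side Bus, FSB) connects the CPU to RAM and other components. Modern CPUs have largely replaced the FSB with an on-chip memory controller and point-to-point links.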

- PCIe (Peripheral Component Interconnect Express) connects the CPU to high-speed peripherals like GPUs and SSDs.

Memory Management Unit (MMU)

- Translates virtual memory addresses to physical addresses, managing RAM access and optimizing memory usage.
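The translation step can be sketched in a few lines of Python. The page table contents below are hypothetical and the 4 KiB page size is an assumption (it is a common choice); a real MMU does this lookup in hardware, using multi-level page tables maintained by the operating system.

```python
PAGE_SIZE = 4096  # 4 KiB pages; assumed here for illustration

# Hypothetical page table: virtual page number -> physical frame number
page_table = {0: 5, 1: 2, 2: 9}

def translate(virtual_address):
    """Translate a virtual address to a physical address via the page table."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # A real MMU would raise a page fault for the operating system to handle
        raise LookupError(f"page fault: virtual page {page_number} is not mapped")
    frame_number = page_table[page_number]
    return frame_number * PAGE_SIZE + offset  # the offset within the page is unchanged

physical = translate(4100)  # virtual page 1, offset 4 -> frame 2, offset 4
```

Virtual address 4100 falls in page 1 at offset 4, and page 1 maps to frame 2, so `physical` is 2 * 4096 + 4 = 8196.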

Floating Point Unit (FPU)

- Specialized unit for floating-point (non-integer) arithmetic, which is heavily used in scientific and engineering calculations.

Thermal Management & Power Control

- Voltage Regulation Modules (VRMs) ensure stable power delivery to the CPU.

- Thermal sensors monitor temperature, enabling features like thermal throttling to prevent overheating.

- Works with heat sinks, fans, and liquid cooling systems to dissipate heat.

CPU Socket & Integrated Heat Spreader (IHS)

- The CPU Socket (e.g., LGA, PGA, BGA) connects the processor to the motherboard.

- The IHS (Integrated Heat Spreader) helps distribute heat for cooling.

Components of a GPU

CUDA Cores / Stream Processors: The fundamental processing units responsible for executing parallel computations. NVIDIA calls them CUDA cores, while AMD refers to them as Stream Processors.

Tensor Cores (for AI & Deep Learning): Specialized cores found in NVIDIA GPUs, optimized for matrix operations, significantly boosting AI and machine learning performance.

RT Cores (Ray Tracing Cores): Dedicated cores in NVIDIA RTX GPUs designed to accelerate real-time ray tracing for realistic lighting, shadows, and reflections in graphics rendering.

VRAM (Video RAM): Dedicated high-bandwidth memory (e.g., GDDR6, HBM2) used for storing textures, models, and frame buffers, enabling smooth rendering and processing of large datasets.

Memory Bus & Controller: Manages the transfer of data between the GPU cores and VRAM, influencing overall memory bandwidth and performance.

ROPs (Raster Operations Pipelines): Responsible for finalizing rendered images by handling pixel output, blending, anti-aliasing, and depth testing.

TMUs (Texture Mapping Units): Process textures and apply them to 3D models, enhancing visual realism in games and rendering applications.

Shader Units: Execute shading calculations, determining how surfaces appear by computing lighting, reflections, and shadows.

PCIe Interface: Connects the GPU to the motherboard, using PCIe lanes for high-speed communication with the CPU and system memory.

Power Management & VRMs (Voltage Regulation Modules): Regulate power delivery to different components, ensuring efficient and stable performance under various workloads.

Cooling System (Fans, Heatsinks, Liquid Cooling): Dissipates heat generated by the GPU, preventing thermal throttling and maintaining optimal performance.

BIOS & Firmware: Controls low-level operations, including clock speeds, power limits, and thermal management.

Now that we have defined both and listed the components that make up each, let us look at the differences between them, some of which were already touched on in the definitions above.

Core Architecture: CPUs vs GPUs

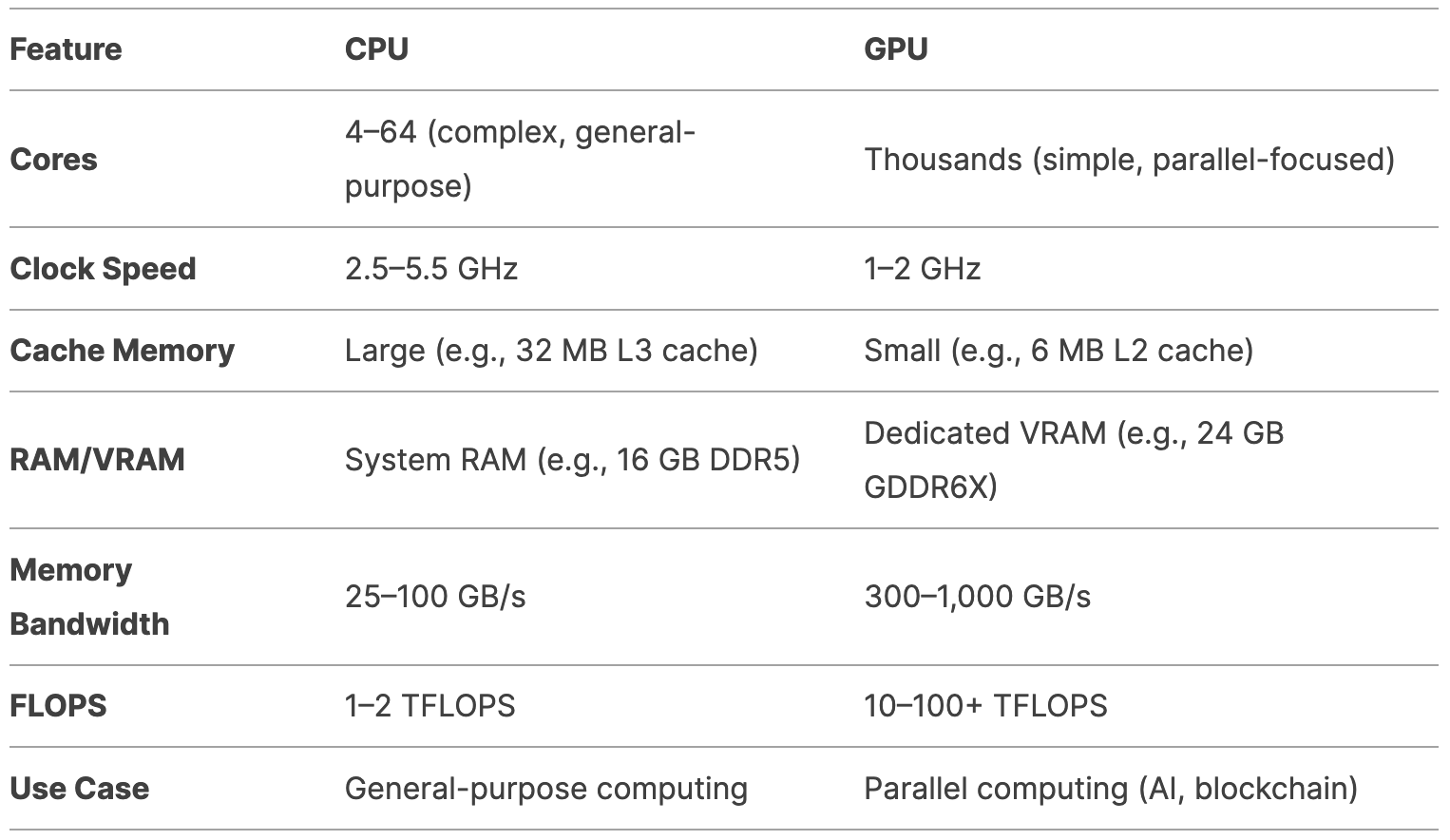

CPUs are designed for general-purpose computing, excelling at sequential tasks, complex decision-making, and running operating systems and applications.

Modern CPUs typically feature 4 to 64 high-performance cores, with clock speeds ranging from 2.5 GHz to 5.5 GHz (or higher with overclocking). They utilize large cache memories (L1, L2, and L3) to reduce latency, with high-end desktop models offering 64 MB or more of L3 cache. Multi-threading technologies, such as Intel’s Hyper-Threading, enable each core to handle multiple threads, enhancing efficiency.

CPUs rely on system RAM (e.g., DDR4 or DDR5) with bandwidths between 25 GB/s and 100 GB/s.

In contrast, GPUs are optimized for parallel processing, making them ideal for graphics rendering, AI, and blockchain workloads. They feature thousands of smaller cores, such as the NVIDIA RTX 4090’s 16,384 CUDA cores, designed for parallel computation. Although GPUs operate at lower clock speeds (typically 1 GHz to 2.5 GHz), their high core count more than compensates for this limitation.

They utilize dedicated high-bandwidth video memory (VRAM), such as GDDR6 or HBM2, with bandwidths exceeding 1 TB/s in high-end models like the NVIDIA A100, which boasts 40 GB of HBM2 memory.

Additionally, GPUs can schedule millions of lightweight threads for a single workload and keep tens of thousands of them running at any moment, making them well-suited for parallel workloads.
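The programming model behind those threads can be imitated in plain Python. In the sketch below, a "kernel" function describes the work of one thread, and a hypothetical `launch` helper calls it once per thread ID. Both names are invented for illustration. The loop runs sequentially here, whereas a GPU would run many thread IDs at the same time.

```python
def saxpy_kernel(thread_id, a, x, y, out):
    """Work done by one GPU thread: compute a single output element."""
    out[thread_id] = a * x[thread_id] + y[thread_id]

def launch(kernel, num_threads, *args):
    """Stand-in for a GPU kernel launch (hypothetical helper).

    This loop runs the thread IDs one after another. On a GPU the same
    kernel would run for many thread IDs at once, which is safe because
    no thread reads or writes another thread's element.
    """
    for thread_id in range(num_threads):
        kernel(thread_id, *args)

x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)
launch(saxpy_kernel, len(x), 2.0, x, y, out)  # out[i] = 2.0 * x[i] + y[i]
```

After the launch, `out` holds `[12.0, 24.0, 36.0, 48.0]`. Because every element is computed independently, the work scales naturally to thousands of cores.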

Computational Throughput: Why GPUs Excel

Parallelism

CPUs are optimized for sequential tasks, where one task is completed before moving to the next. This makes them ideal for tasks like web browsing, office applications, and gaming.

GPUs, on the other hand, are designed for parallelism. They can break down large tasks into thousands of smaller ones and process them simultaneously. This makes them ideal for tasks like:

Blockchain Mining

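Mining proof-of-work cryptocurrencies involves repeatedly computing cryptographic hashes until one meets a target. GPUs can perform hundreds of millions to billions of hash calculations per second, depending on the algorithm, far outperforming CPUs. (Bitcoin mining is now dominated by dedicated ASICs that reach trillions of hashes per second (TH/s), and Ethereum stopped using mining when it moved to proof of stake in 2022.)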
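A simplified proof-of-work search shows why this workload parallelizes so well. The `mine` function and its "leading zero hex digits" rule are a hypothetical simplification: Bitcoin, for instance, applies SHA-256 twice to a binary block header and compares the result against a numeric target. The sketch uses only Python's standard `hashlib` module.

```python
import hashlib

def mine(block_data, difficulty, max_nonce=1_000_000):
    """Search for a nonce whose SHA-256 digest starts with `difficulty` zero hex digits."""
    prefix = "0" * difficulty
    for nonce in range(max_nonce):
        # Each nonce is hashed independently of every other nonce
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
    return None  # no valid nonce in the searched range

result = mine("example block", difficulty=3)
```

No attempt depends on the result of another, so the nonce range can be split across thousands of GPU threads, each testing its own slice.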

AI and Machine Learning

Training neural networks involves performing matrix multiplications and other linear algebra operations on massive datasets. GPUs can process these operations in parallel, significantly reducing training times.
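A plain-Python matrix multiplication makes the parallelism visible. This is a teaching sketch, not how production libraries implement it: each output element depends only on one row of the first matrix and one column of the second, so every element could be computed by a separate GPU thread.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    out = [[0] * m for _ in range(n)]
    for i in range(n):      # on a GPU, each (i, j) pair could be
        for j in range(m):  # computed by a separate thread
            out[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return out

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product = matmul(a, b)
```

For these inputs `product` is `[[19, 22], [43, 50]]`. A neural network layer performs the same operation on matrices with thousands of rows and columns, which is where the GPU's core count pays off.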

Floating-Point Performance

GPUs are optimized for floating-point throughput, measured in FLOPS (floating-point operations per second), which is critical for scientific computing, AI, and graphics rendering. For example, a high-end CPU like the AMD Ryzen 9 7950X delivers around 1-2 TFLOPS (trillion FLOPS), whereas a high-end GPU like the NVIDIA RTX 4090 delivers over 80 TFLOPS.
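Figures like these are theoretical peaks, and a rough version can be derived as cores × clock speed × operations per cycle. The sketch below applies that formula. The GPU numbers are the RTX 4090's 16,384 cores and 2.52 GHz boost clock. The CPU's 32 single-precision operations per core per cycle is an assumption about its vector units, so treat the CPU result as a ballpark only.

```python
def peak_tflops(cores, clock_ghz, flops_per_cycle_per_core):
    """Theoretical peak throughput in TFLOPS (trillions of operations per second)."""
    return cores * clock_ghz * 1e9 * flops_per_cycle_per_core / 1e12

# NVIDIA RTX 4090: 16,384 CUDA cores at a 2.52 GHz boost clock. Each core can
# issue one fused multiply-add per cycle, which counts as 2 operations.
gpu_tflops = peak_tflops(cores=16_384, clock_ghz=2.52, flops_per_cycle_per_core=2)

# AMD Ryzen 9 7950X: 16 cores at the 4.5 GHz base clock. The 32 operations per
# cycle per core is an assumption (two 256-bit fused multiply-add units).
cpu_tflops = peak_tflops(cores=16, clock_ghz=4.5, flops_per_cycle_per_core=32)

print(f"GPU: {gpu_tflops:.1f} TFLOPS, CPU: {cpu_tflops:.1f} TFLOPS")
```

Under these assumptions the GPU comes out at about 82.6 TFLOPS and the CPU at about 2.3 TFLOPS. Real workloads rarely reach these peaks, but the gap of more than an order of magnitude is the important takeaway.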

Memory Bandwidth

GPUs have significantly higher memory bandwidth than CPUs. For example, a CPU with DDR5 RAM might have a bandwidth of 100 GB/s, whereas a GPU with GDDR6X VRAM might reach 1 TB/s.
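Peak memory bandwidth follows from two numbers: the width of the memory bus and how many transfers it makes per second. The sketch below works through one example of each kind. The configurations are assumptions chosen for illustration: dual-channel DDR5-6000 for the CPU, and the RTX 4090's 384-bit GDDR6X bus at 21 billion transfers per second per pin for the GPU.

```python
def bandwidth_gb_per_s(bus_width_bits, transfers_per_s):
    """Peak memory bandwidth in GB/s: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_s / 1e9

# Dual-channel DDR5-6000: two 64-bit channels, 6 billion transfers per second
cpu_bandwidth = bandwidth_gb_per_s(bus_width_bits=128, transfers_per_s=6e9)

# RTX 4090 GDDR6X: 384-bit bus, 21 billion transfers per second per pin
gpu_bandwidth = bandwidth_gb_per_s(bus_width_bits=384, transfers_per_s=21e9)

print(f"CPU: {cpu_bandwidth:.0f} GB/s, GPU: {gpu_bandwidth:.0f} GB/s")
```

This gives 96 GB/s for the CPU configuration and 1008 GB/s (about 1 TB/s) for the GPU. The GPU gains from both a much wider bus and a faster transfer rate.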

GPUs for High-Computational Tasks

Blockchain mining relies on solving cryptographic puzzles, such as SHA-256 for Bitcoin, which require massive parallel processing power. GPUs excel at this task, performing hundreds of millions to billions of hash calculations per second, whereas CPUs typically manage only millions.

Similarly, artificial intelligence and machine learning workloads, particularly deep neural network training, demand extensive matrix multiplications and linear algebra operations on large datasets. GPUs accelerate these computations by processing them in parallel, significantly reducing training times: what might take weeks on a CPU can often be completed in hours or days on a GPU.

Additionally, GPUs were originally designed for graphics rendering, where they process millions of pixels simultaneously, making them essential for gaming, video editing, and 3D rendering.

The following figure summarizes the technical differences between a CPU and a GPU and their respective capabilities.

Conclusion

Both the CPU and the GPU work together to deliver an amazing experience in gaming, blockchain and now AI. The innovations made by NVIDIA and other GPU makers will continue to push the frontiers of what GPUs can do, letting the software that runs on top of them evolve quickly and open up new possibilities. The future is indeed going to be fascinating. Do you want to go deeper into GPUs and understand the differences from the inside out? Watch this video that goes in-depth into the components of a GPU.