There are numerous frameworks and SDKs available for building AI agents, and most of them are based on the Python programming language. This means you need basic programming knowledge, and Python in particular, to build agents with them. What if there were a declarative way to build agents instead? One that requires no knowledge of Python or any other programming language, is quick and easy to set up, and is built on the principles of containerization, with Docker as its cornerstone?

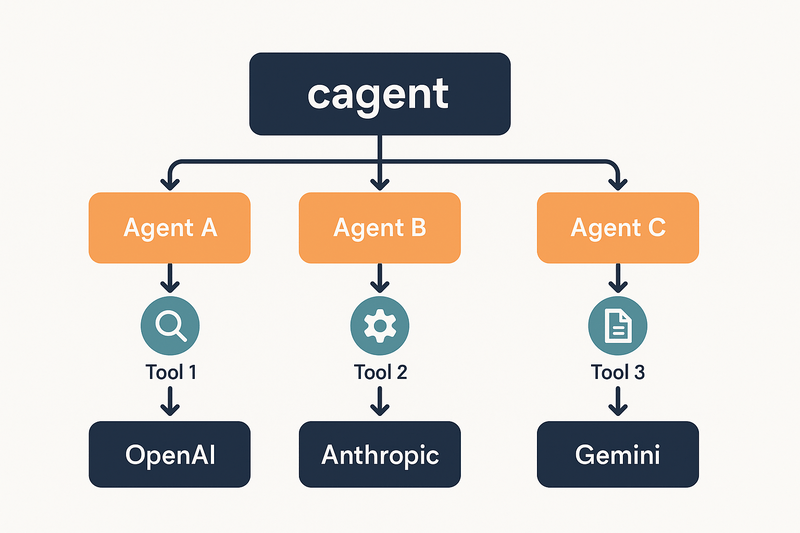

Enter cagent: an open-source, customizable multi-agent runtime that makes it easy to build, orchestrate, and run teams of AI agents. Whether you want a simple assistant or a full team of tool-powered virtual experts, cagent helps you get there with minimal friction.

In this long-form article, we’ll dive into:

- What cagent is and why it matters

- Its key features and architecture

- How to get started with your first agent

- How to empower agents with tools via the Model Context Protocol (MCP)

- Real-world use cases of multi-agent teams

- Advanced features like Docker Hub integration and local model support

- Why multi-agent systems are shaping the next phase of AI

By the end, you’ll have a clear picture of how cagent can fit into your AI workflows and why it’s one of the most exciting projects in the AI ecosystem today.

✨ What is cagent?

At its core, cagent lets you build intelligent agents using simple YAML files. Each agent has:

- A model (e.g., OpenAI GPT, Anthropic Claude, Google Gemini, or local models).

- A description (what it is, what it does).

- An instruction (how it should behave).

- An optional toolset (extensions that give it real-world powers).

Once described, you can run these agents directly in your terminal or even share them as containerized packages. The philosophy behind cagent is simple: make it dead easy to build and run a team of AI experts without requiring heavy engineering.

🎯 Why Multi-Agent Systems?

Before diving deeper into cagent, let’s step back and understand the why.

Traditional single AI agents are powerful but limited. Imagine asking one AI to search the web, summarize research papers, write Python code, and save results to a file. It might manage some of this, but the complexity grows fast, and accuracy often drops.

Now, imagine instead:

- A search agent that specializes in retrieving data.

- A summarization agent that condenses information.

- A coding agent that handles programming tasks.

- A file agent that saves results.

A coordinator agent orchestrates these specialists, breaking down the task, assigning subtasks, and combining results.

That’s the power of multi-agent AI systems and exactly what cagent enables.

🔑 Key Features of cagent

cagent is not just another AI wrapper. It’s a thoughtfully designed runtime with features that make it uniquely powerful:

- 🏗️ Multi-agent architecture: Build single agents or full teams that coordinate tasks.

- 🔧 Rich tool ecosystem: Extend agents with tools via the Model Context Protocol (MCP), enabling access to search engines, file systems, APIs, and more.

- 🔄 Smart delegation: Agents can automatically delegate tasks to the most suitable agent in the team.

- 📝 Declarative YAML configuration: Define agents in just a few lines of YAML instead of hundreds of lines of code.

- 💭 Built-in reasoning tools: Comes with “think”, “todo”, and “memory” tools for advanced problem-solving.

- 🌐 Multiple AI providers: Works with OpenAI, Anthropic Claude, Google Gemini, and local runtimes like Docker Model Runner (DMR).

- 📦 Push & Pull agents: Share agents as OCI images on Docker Hub using `cagent push` and `cagent pull`.

- 🚀 Easy onboarding: With prebuilt binaries for all major OSes, setup takes minutes.
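To make the built-in reasoning tools a little more concrete, here is a minimal sketch of an agent that enables them. It assumes the tools are switched on as `toolsets` entries with `type: think`, `type: todo`, and `type: memory`, and that the memory tool takes a `path` to a local file for persisted memories; the file name `./agent_memory.db` is hypothetical. Treat this as an illustration and check the cagent documentation for the exact options.

```yaml
agents:
  root:
    model: openai/gpt-5-mini
    description: A planning assistant
    instruction: |
      Think through problems step by step, track your work as a todo list,
      and remember useful facts about the user between sessions.
    # Assumed built-in toolset types; verify against the cagent docs.
    toolsets:
      - type: think   # scratchpad for step-by-step reasoning
      - type: todo    # lets the agent keep and update a task list
      - type: memory  # persists facts across sessions
        path: ./agent_memory.db  # hypothetical storage file
```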

⚡ Getting Started with cagent

Step 1: Installation

Download prebuilt binaries from the project’s GitHub releases page. For example, on Linux:

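```bash
# Make the downloaded binary executable
chmod +x /path/to/downloads/cagent-linux-amd64

# Move it onto your PATH (writing to /usr/local/bin usually requires sudo)
sudo mv /path/to/downloads/cagent-linux-amd64 /usr/local/bin/cagent
```

Now cagent can be run from anywhere in your terminal.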

Step 2: Set Your API Keys

Depending on your provider, set the matching environment variables:

```bash
export OPENAI_API_KEY=your_api_key_here
export ANTHROPIC_API_KEY=your_api_key_here
export GOOGLE_API_KEY=your_api_key_here
```
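Each provider reads its own key, so you only need the variables for the providers your agents actually use. Besides the inline `provider/model` shorthand used in the examples below (e.g., `openai/gpt-5-mini`), models can be declared once under a top-level `models:` section and referenced by name. The sketch below assumes that layout and the provider IDs `openai`, `anthropic`, and `google`; the aliases (`gpt`, `sonnet`, `gemini`) and the Anthropic and Google model IDs are only examples, so substitute models your account can access.

```yaml
# Each named model maps to a provider and the API key it reads:
#   openai    -> OPENAI_API_KEY
#   anthropic -> ANTHROPIC_API_KEY
#   google    -> GOOGLE_API_KEY
models:
  gpt:
    provider: openai
    model: gpt-5-mini
  sonnet:
    provider: anthropic
    model: claude-sonnet-4-0  # example model ID
  gemini:
    provider: google
    model: gemini-2.5-flash   # example model ID

agents:
  root:
    model: sonnet  # refers to the "sonnet" entry above
    description: A helpful AI assistant
    instruction: |
      Be helpful, accurate, and concise in your responses.
```

Switching providers then means changing one `model:` reference rather than editing every agent.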

Step 3: Create Your First Agent

Make a file called basic_agent.yaml:

```yaml
agents:
  root:
    model: openai/gpt-5-mini
    description: A helpful AI assistant
    instruction: |
      You are a knowledgeable assistant that helps users with various tasks.
      Be helpful, accurate, and concise in your responses.
```

Run it:

```bash
cagent run basic_agent.yaml
```

And that’s it — you’ve got your first cagent agent running!

🔧 Improving Agents with MCP Tools

The real magic of cagent lies in tooling.

Using the Model Context Protocol (MCP), you can equip agents with external capabilities—search engines, file systems, APIs, or any custom service.

Here’s an example where we give our agent DuckDuckGo search and file system read/write capabilities:

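```yaml
agents:
  root:
    model: openai/gpt-5-mini
    description: A helpful AI assistant
    instruction: |
      You are a knowledgeable assistant that helps users with various tasks.
      Be helpful, accurate, and concise in your responses. Write results to disk.
    toolsets:
      # DuckDuckGo search, run as a containerized MCP server from Docker's MCP catalog
      - type: mcp
        ref: docker:duckduckgo
      # Local filesystem MCP server (install with: cargo install rust-mcp-filesystem)
      - type: mcp
        command: rust-mcp-filesystem
        args: ["--allow-write", "."]        # allow writes, limited to the current directory
        tools: ["read_file", "write_file"]  # expose only these two tools to the agent
        env:
          - "RUST_LOG=debug"                # verbose logging from the MCP server
```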

Now this agent can:

- Search the web.

- Save results locally.

- Retrieve saved files later.

Suddenly, your agent becomes far more useful in real-world workflows.

🤝 Multi-Agent Teams in Action

cagent supports multi-agent orchestration—where one agent coordinates and delegates tasks to others.

Example:

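```yaml
agents:
  root:
    model: claude
    description: "Coordinator agent"
    instruction: |
      Break user requests into tasks, delegate to helper, and combine results.
    sub_agents: ["helper"]

  helper:
    model: claude
    description: "Task assistant"
    instruction: |
      Complete tasks assigned by the root agent thoroughly and accurately.

# "claude" is a model alias; define it so the example runs as-is.
models:
  claude:
    provider: anthropic
    model: claude-sonnet-4-0  # example model ID
```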

This setup enables division of labor. The root agent manages workflows, while the helper focuses on execution.

Imagine scaling this up to:

- A researcher agent for academic papers.

- A developer agent for coding.

- A summarizer agent for reporting.

- A coordinator agent that glues it all together.

That’s a virtual AI team at your fingertips.
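Scaled up in that direction, such a team could be sketched as below. It uses the same kinds of keys as the examples above (`model`, `description`, `instruction`, `sub_agents`, and an MCP toolset); the agent names and instructions are hypothetical, and the prompts would need tuning for real work.

```yaml
agents:
  root:
    model: openai/gpt-5-mini
    description: Coordinator that plans the work and merges the results
    instruction: |
      Break the user's request into research, coding, and reporting tasks.
      Delegate each task to the most suitable sub-agent, then combine
      their outputs into a single answer.
    sub_agents: ["researcher", "developer", "summarizer"]

  researcher:
    model: openai/gpt-5-mini
    description: Finds and reads academic papers
    instruction: |
      Search for relevant papers and report titles, links, and key findings.
    toolsets:
      - type: mcp
        ref: docker:duckduckgo  # only the researcher gets web search

  developer:
    model: openai/gpt-5-mini
    description: Writes and explains code
    instruction: |
      Write clear, working code for the task you are given and explain it briefly.

  summarizer:
    model: openai/gpt-5-mini
    description: Turns raw findings into a short report
    instruction: |
      Condense the material you receive into a concise, well-structured summary.
```

In this layout only the coordinator declares `sub_agents`, so all delegation flows through one place, and each specialist gets only the tools its role needs.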

🧑‍💻 Advanced Features for Power Users

cagent is not just for simple YAML experiments. It includes advanced features for real-world development:

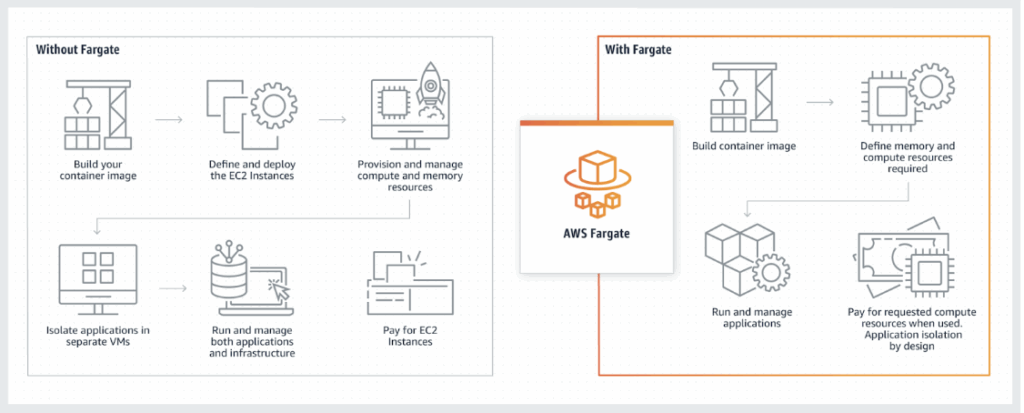

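- Local Model Support with DMR

Run open models such as Qwen or Gemma locally with Docker Model Runner (DMR), which uses llama.cpp as its inference engine:

```yaml
agents:
  root:
    model: local-qwen  # uses the local model defined below
    description: A helpful AI assistant running locally
    instruction: |
      Be helpful, accurate, and concise in your responses.

models:
  local-qwen:
    provider: dmr
    model: ai/qwen3
    max_tokens: 8192
    provider_opts:
      # Extra llama.cpp flags: GPU layers to offload, repetition penalty
      runtime_flags: ["--ngl=33", "--repeat-penalty=1.2"]
```

- Quick Generation with `cagent new`

Generate agents or teams from a single prompt. You’ll be guided through an interactive process:

```bash
cagent new --model openai/gpt-5
```

- Push & Pull Agents via Docker Hub

Share agents easily:

```bash
# Publish a local agent definition under your Docker Hub namespace
cagent push ./my_agent.yaml mynamespace/myagent

# Pull an agent someone else has published
cagent pull creek/pirate
```

- Dogfooding for Development

The cagent team even uses an AI Go developer agent to help improve cagent itself, a neat example of dogfooding in practice.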

🌍 Real-World Use Cases for cagent

Here are some practical ways developers can use cagent today:

- Research Automation: A team of agents that search, summarize, and save academic papers.

- DevOps Assistants: Agents that read system logs, suggest fixes, and run infrastructure commands.

- Content Creation: Teams that brainstorm ideas, generate drafts, and polish writing.

- Data Pipelines: Agents that fetch data, transform it, and push it into storage.

- Learning Companions: Multi-agent tutors that explain concepts, quiz you, and suggest exercises.

In all these cases, cagent reduces complexity by making orchestration declarative and reusable.
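For instance, the read-only half of the DevOps assistant could be sketched with the same filesystem MCP server used earlier. This assumes `rust-mcp-filesystem` treats its positional arguments as the directories it may access, stays read-only when `--allow-write` is omitted, and offers a `list_directory` tool alongside `read_file`; `/var/log` and the agent’s wording are illustrative. Running infrastructure commands would need an additional tool and is deliberately left out.

```yaml
agents:
  root:
    model: openai/gpt-5-mini
    description: Reads system logs and suggests fixes
    instruction: |
      Read the log files you are pointed to, identify errors and warnings,
      explain the likely cause, and suggest a fix. Never modify files.
    toolsets:
      - type: mcp
        command: rust-mcp-filesystem
        # No --allow-write flag, so the server should only read /var/log (assumed behavior).
        args: ["/var/log"]
        tools: ["read_file", "list_directory"]  # assumed tool names
```

Keeping the first version read-only is a cautious design choice: the agent can diagnose problems, while a human still applies the fix.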

🔮 Why cagent Matters for the Future

As AI moves into the era of agentic workflows, runtimes like cagent become foundational. Here’s why it matters:

- Scalability: One agent is powerful. A team of specialized agents can take on multi-step tasks that would overwhelm any single agent.

- Reusability: YAML definitions can be shared, versioned, and reused across projects.

- Extensibility: New tools and providers can be plugged in without rewriting agents.

- Collaboration: Encourages modular AI design—specialists working together, just like humans.

In short, cagent represents the next step in operationalizing AI at scale.

🏁 Conclusion

cagent is an early but powerful framework that makes this vision practical. From single assistants to complex multi-agent teams with web search, file systems, and APIs, cagent helps you experiment, build, and share your agentic workflows. If you’re serious about AI development, cagent is a project worth trying today.

👉 Download it, create your first YAML agent, and see just how far the multi-agent future can take you.