On October 19–20, 2025, AWS experienced a widespread service disruption in its largest and oldest region, US East (N. Virginia). The incident, which lasted several hours and affected major services including EC2, DynamoDB, EKS, and CloudFormation, is a reminder that even the most mature cloud platforms can suffer cascading failures. In this post, we’ll unpack what went wrong, how AWS recovered, and what cloud engineers can learn from the event.

The Core of the Issue: A DNS Outage in DynamoDB’s Control Plane

The incident began inside Amazon DynamoDB’s control plane, the internal system that handles metadata operations such as table creation, configuration updates, and replication.

At around 09:15 AM PDT on October 19, a DNS configuration change triggered a chain reaction that prevented control plane requests from correctly resolving to their internal endpoints.

While DynamoDB’s data plane (reads and writes) continued working, the control plane became unreachable, which affected dependent AWS services that rely on DynamoDB for state management or configuration storage.

Among these were:

- AWS Lambda

- AWS CloudFormation

- Amazon EKS

- Amazon EC2 Auto Scaling

- AWS Identity and Access Management (IAM)

Each of these services depends in part on DynamoDB for storing operational metadata, meaning when the control plane DNS failed, these systems started to degrade one after another.

How the Problem Escalated Across AWS Services

As DNS lookups failed, retry storms began. Control planes across several services repeatedly attempted to reconnect, generating high CPU load on networking components and consuming significant Route 53 resolver capacity.
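To get a feel for why retry behavior matters here, the sketch below counts how many attempts a single client makes during an outage under two policies: a fixed retry interval and a capped exponential backoff. It is a back-of-the-envelope model, not a description of how AWS services actually retry; the function names and all numbers are assumptions chosen for illustration.

```python
def attempts_fixed(outage_s: float, interval_s: float) -> int:
    """Attempts one client makes during an outage when retrying at a fixed interval."""
    # One attempt at t=0, then one more every interval_s until the outage ends.
    return int(outage_s // interval_s) + 1


def attempts_backoff(outage_s: float, base_s: float, cap_s: float) -> int:
    """Attempts one client makes when the delay doubles after each failure, up to a cap."""
    elapsed, delay, attempts = 0.0, base_s, 1  # first attempt happens at t=0
    while elapsed + delay <= outage_s:
        elapsed += delay
        delay = min(delay * 2, cap_s)  # double the wait, but never exceed the cap
        attempts += 1
    return attempts
```

For an assumed 10-minute outage, `attempts_fixed(600, 1)` gives 601 attempts per client, while `attempts_backoff(600, 1, 60)` gives 15. Multiply that gap by thousands of clients and the difference in load on a struggling resolver becomes clear.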

By mid-day, the issue had spread to:

- Elastic Load Balancing (ELB)

- ECS and EKS control planes

- CloudFormation stack operations

- New EC2 instance launches and Auto Scaling events

Customers reported being unable to create or update infrastructure, with automation pipelines hanging indefinitely.

AWS engineering teams isolated the issue to the DynamoDB DNS layer and began rolling back the offending configuration. However, the rollback required full propagation through Amazon’s internal DNS hierarchy, which extended recovery time.

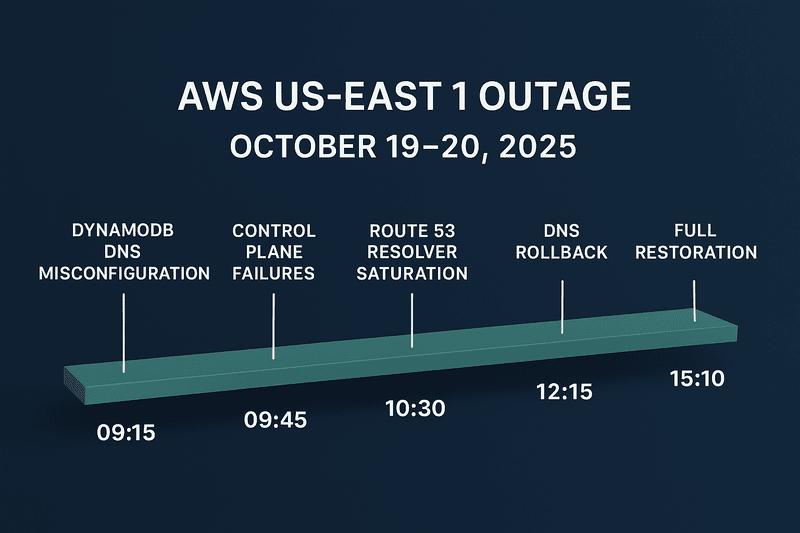

🕒 Recovery Timeline (Simplified)

| Time (PDT) | Event |
|---|---|
| 09:15 | DNS misconfiguration deployed in the DynamoDB control plane |
| 09:45 | Control plane failures begin; EC2 and EKS start timing out |
| 10:30 | Route 53 resolver saturation observed; retry storms continue |
| 12:15 | DNS rollback initiated by AWS engineering |
| 13:45 | Internal DNS propagation completes across clusters |
| 14:30 | Dependent services begin auto-recovery |
| 15:10 | AWS declares full restoration of DynamoDB and dependent services |

Total incident duration: ~6 hours

AWS’s Response and Improvements

In the post-incident analysis, AWS committed to several remediation measures:

- DNS Change Verification Layer – AWS is adding new safety checks that validate DNS configuration changes before global rollout, ensuring they don’t affect service-to-service communication.

- Increased Resolver Isolation – Route 53 resolver clusters will now isolate per-service DNS traffic to prevent retry storms from cascading.

- Enhanced Control Plane Fault Isolation – The DynamoDB control plane is being redesigned to fail independently across Availability Zones.

- Improved Monitoring and Alarms – More granular alarms for DNS propagation delays and abnormal retry patterns.

- Better Communication – AWS acknowledged that its status updates were delayed and is improving automation for faster public updates.

Lessons Learned for Cloud Engineers

Even though this was an AWS-managed failure, it contains valuable lessons for anyone architecting cloud workloads.

1. Design for Control Plane Dependencies

Many services (EKS, Lambda, ECS, etc.) depend indirectly on other AWS control planes.

Lesson: Even if your application uses highly available data planes, failures in the control plane can stop deployments, scaling, or configuration updates. Always include manual fallback runbooks and cached configurations for critical operations.
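One way to picture the "cached configurations" idea is a loader that keeps a last-known-good copy on local disk and falls back to it when the remote source is unreachable. This is a minimal sketch under stated assumptions: `load_config`, `fetch`, and `cache_path` are hypothetical names, and `fetch` stands in for whatever call your system makes to a parameter store or control plane API.

```python
import json
from pathlib import Path
from typing import Callable


def load_config(fetch: Callable[[], dict], cache_path: Path) -> dict:
    """Return fresh config if the remote fetch works, else the last cached copy."""
    try:
        config = fetch()
    except Exception:
        # Remote source unavailable: fall back to the last known good config.
        if cache_path.exists():
            return json.loads(cache_path.read_text())
        raise  # nothing cached yet, so surface the original error
    # Fetch succeeded: refresh the local cache for the next outage.
    cache_path.write_text(json.dumps(config))
    return config
```

The trade-off is staleness: during a long outage the service runs on old settings, so this pattern suits values that change rarely and are safe to reuse.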

2. Minimize Cross-Service Dependencies

If one managed service (like DynamoDB or S3) experiences control plane issues, others may be affected.

Lesson: Isolate workloads across services or accounts where possible. Use decoupled patterns (like queues or event buses) rather than tight service chaining.
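The decoupling idea can be illustrated with a small in-process buffer: the producer keeps accepting work while the downstream call is failing, and delivery resumes once it recovers. This is only a stand-in for a durable queue or event bus (which would survive process restarts); `BufferedSender` and its parameters are hypothetical names for this sketch.

```python
from collections import deque
from typing import Any, Callable


class BufferedSender:
    """Accepts items even while the downstream `send` callable is failing."""

    def __init__(self, send: Callable[[Any], None], max_pending: int = 1000):
        self._send = send
        # With maxlen set, the oldest item is dropped once the buffer is full.
        self._pending: deque = deque(maxlen=max_pending)

    @property
    def pending(self) -> int:
        return len(self._pending)

    def submit(self, item: Any) -> None:
        """Queue the item, then try to deliver whatever is waiting."""
        self._pending.append(item)
        self.flush()

    def flush(self) -> int:
        """Deliver items in order; stop at the first failure. Returns the count delivered."""
        delivered = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except Exception:
                break  # downstream still unavailable; keep the item for later
            self._pending.popleft()
            delivered += 1
        return delivered
```

The caller never sees the downstream failure directly. It only sees `pending` grow, which is also a useful signal to alarm on.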

3. Implement DNS Resilience

DNS remains one of the most fragile yet critical layers in cloud systems.

Lesson: Configure local DNS caching, and ensure your apps gracefully handle resolution failures with exponential backoff rather than aggressive retry loops.
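As a rough illustration of that advice, the sketch below wraps name resolution in exponential backoff with full jitter and keeps the last successful answer as a fallback. It uses the standard library's `socket.getaddrinfo`; the function names, the module-level cache, and the default delays are assumptions for this example, and serving a stale address is a deliberate trade-off that will not suit every workload.

```python
import random
import socket
import time
from typing import Callable, Dict, List

# Last successful answer per hostname, used only when resolution keeps failing.
_last_good: Dict[str, List[str]] = {}


def _system_resolve(host: str) -> List[str]:
    """Resolve a hostname to a sorted list of unique IP addresses."""
    infos = socket.getaddrinfo(host, None, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def resolve_with_backoff(
    host: str,
    resolve: Callable[[str], List[str]] = _system_resolve,
    max_attempts: int = 5,
    base_delay: float = 0.2,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Resolve `host`, backing off between failures; fall back to the last good answer."""
    for attempt in range(max_attempts):
        try:
            addrs = resolve(host)
            _last_good[host] = addrs
            return addrs
        except OSError:  # socket.gaierror is a subclass of OSError
            if attempt < max_attempts - 1:
                # Full jitter: wait a random time up to the capped exponential delay.
                sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    if host in _last_good:
        return _last_good[host]
    raise OSError(f"could not resolve {host} and no cached address is available")
```

The jitter matters as much as the backoff: without it, clients that failed at the same moment retry at the same moment, which recreates the storm in waves.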

4. Chaos and Dependency Testing

Real resilience isn’t proven until tested.

Lesson: Simulate partial service failures (e.g., DNS delays, API timeouts) in pre-production to see how your system behaves when core AWS components falter.
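A lightweight way to start is a wrapper that injects latency and failures into a dependency call in a test environment. The sketch below is a minimal, hypothetical helper (`with_faults` and its parameters are not from any AWS tool); dedicated fault-injection services exist, but even something this small can reveal whether callers time out, retry sensibly, or hang.

```python
import random
import time
from typing import Any, Callable


def with_faults(
    call: Callable[..., Any],
    failure_rate: float,
    delay_s: float = 0.0,
    rng: Callable[[], float] = random.random,
    sleep: Callable[[float], None] = time.sleep,
) -> Callable[..., Any]:
    """Wrap `call` so it is delayed by `delay_s` and fails with probability `failure_rate`."""

    def wrapped(*args: Any, **kwargs: Any) -> Any:
        if delay_s:
            sleep(delay_s)  # simulate a slow dependency, e.g. delayed DNS
        if rng() < failure_rate:
            raise TimeoutError("injected fault")  # simulate an API timeout
        return call(*args, **kwargs)

    return wrapped
```

For example, wrapping a client call as `with_faults(client_call, failure_rate=0.3, delay_s=2.0)` in pre-production makes roughly 30% of calls fail after a 2-second delay, which is usually enough to expose missing timeouts and unbounded retry loops.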

5. Operational Awareness During Outages

When AWS has an incident, visibility is key.

Lesson: Subscribe to AWS Health Dashboard notifications, follow @AWSSupport on X (Twitter), and have an incident response protocol ready. Don’t wait for official resolution: shift workloads or scale back automation if possible.

Conclusion

The AWS us-east-1 outage of October 2025 wasn’t caused by a capacity shortage or a regional failure. It was a reminder that even the most mature distributed systems can falter from a single configuration change.

For cloud engineers, this event highlights the importance of redundancy, observability, and dependency awareness. No cloud provider is immune to cascading failures, but understanding how and why they happen helps us build stronger, more resilient systems.